Integrating with Amazon RDS — Full RDS Scan

Learn how to export an RDS snapshot to an Amazon S3 bucket so it can be scanned by Nightfall.

To scan an Amazon RDS database instance (e.g., MySQL or PostgreSQL), you must create a snapshot of that instance and export the snapshot to S3.

The export process runs in the background and doesn't affect the performance of your active DB instance. Exporting RDS snapshots can take a while, depending on your database type and size.

Once the snapshot has been exported, you can scan the resulting Parquet files with Nightfall like any other file. You can do this using our endpoints for uploading files or our Amazon S3 Python integration.

Prerequisites

In addition to having created your RDS instance, you will need to define the following in order to export your snapshots so they can later be scanned by Nightfall:

An Amazon S3 bucket

In order to perform this scan, you will need to configure an Amazon S3 bucket to which you will export a snapshot.

S3 Bucket Requirements

This bucket must have snapshot permissions and must be in the same AWS Region as the snapshot being exported.

If you have not already created a designated S3 bucket, in the AWS console select Services > Storage > S3.

Click the "Create bucket" button and give your bucket a unique name as per the instructions.

For more information please see Amazon's documentation on identifying an Amazon S3 bucket for export.

IAM Role

You will need an Identity and Access Management (IAM) role in order to transfer a snapshot to your S3 bucket.

This role may be defined at the time of export, in which case it is given the required permissions.

You may also create the role under Services > Security, Identity, & Compliance > IAM by selecting “Roles” under the “Access management” section of the left-hand navigation.

From there you can click the “Create role” button and create a role where “AWS Service” is the trusted entity type.

For more information, see Identity and access management in Amazon RDS and Providing access to an Amazon S3 bucket using an IAM role.
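As a rough sketch, the two policy documents such a role needs can be built as shown below. The action list and the `export.rds.amazonaws.com` service principal follow Amazon's documentation for snapshot exports. The bucket name `my-nightfall-export-bucket` is a placeholder and the helper names are hypothetical, so adjust both to your environment.

```python
import json


def build_export_permissions_policy(bucket_name: str) -> dict:
    """Permissions policy that lets the export task write to the given bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ExportPolicy",
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject*",
                    "s3:ListBucket",
                    "s3:GetObject*",
                    "s3:DeleteObject*",
                    "s3:GetBucketLocation",
                ],
                # The bucket itself and every object inside it.
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
            }
        ],
    }


def build_trust_policy() -> dict:
    """Trust policy that lets the RDS export service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "export.rds.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bucket name; replace with your own.
    print(json.dumps(build_export_permissions_policy("my-nightfall-export-bucket"), indent=2))
    print(json.dumps(build_trust_policy(), indent=2))
```

The printed JSON can be pasted into the IAM console when creating the role and its policy by hand.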

AWS KMS Key

You must create a symmetric encryption key using the AWS Key Management Service (KMS).

From your AWS console, select Services > Security, Identity, & Compliance > Key Management Service.

From there you can click the “Create key” button and follow the instructions.

Walkthrough

To do this task manually, go to the Amazon RDS service (Services > Database > RDS) and select the database to export from your list of databases.

Select the “Maintenance & backups” tab. Go to the “Snapshots” section.

You can select an existing automated snapshot or manually create a new snapshot with the “Take snapshot” button.

Once the snapshot is complete, click the snapshot’s name.

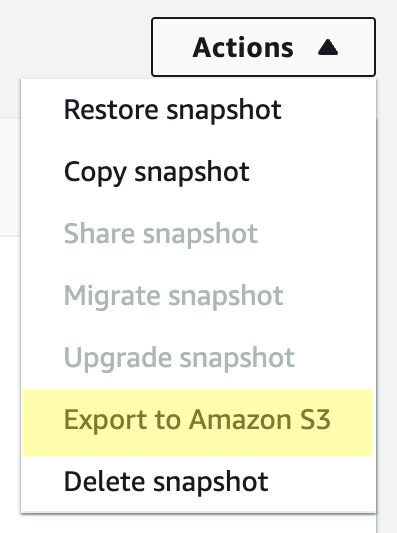

From the “Actions” menu in the upper right, select “Export to Amazon S3”.

- Enter a unique export identifier

- Choose whether you want to export all or part of your data (You will be exporting to Parquet)

- Choose the S3 bucket

- Choose or create your designated IAM role for backup

- Choose your AWS KMS Key

- Click the Export button
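The same export can also be started programmatically. The sketch below maps the console choices above onto the parameters of boto3's RDS `start_export_task` call. All ARNs, the bucket name, the region, and the helper names are placeholders or hypothetical, and the final call assumes boto3 is installed and configured with credentials.

```python
from typing import Optional, Sequence


def build_export_request(
    export_id: str,
    snapshot_arn: str,
    bucket: str,
    role_arn: str,
    kms_key_id: str,
    export_only: Optional[Sequence[str]] = None,
) -> dict:
    """Collect the export settings as keyword arguments for start_export_task."""
    params = {
        "ExportTaskIdentifier": export_id,  # the unique export identifier
        "SourceArn": snapshot_arn,          # the snapshot to export
        "S3BucketName": bucket,             # the destination bucket
        "IamRoleArn": role_arn,             # the role allowed to write to the bucket
        "KmsKeyId": kms_key_id,             # the symmetric KMS key
    }
    if export_only:
        # Partial export: restrict to specific databases, schemas, or tables.
        params["ExportOnly"] = list(export_only)
    return params


def start_export(params: dict, region: str) -> str:
    """Start the export task and return its initial status."""
    import boto3  # imported lazily so build_export_request works without boto3

    rds = boto3.client("rds", region_name=region)
    response = rds.start_export_task(**params)
    return response["Status"]


if __name__ == "__main__":
    # Placeholder values only; nothing is sent to AWS here.
    request = build_export_request(
        export_id="my-export-1",
        snapshot_arn="arn:aws:rds:us-east-1:123456789012:snapshot:my-snapshot",
        bucket="my-nightfall-export-bucket",
        role_arn="arn:aws:iam::123456789012:role/my-export-role",
        kms_key_id="arn:aws:kms:us-east-1:123456789012:key/my-key-id",
    )
    print(request)
```

Calling `start_export(request, "us-east-1")` would submit the task; the region must match the one that holds both the snapshot and the bucket.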

Once the Status column of the export shows "Complete", you can click the link to the export under the S3 bucket column.

Within the export in the S3 bucket, you will find a series of folders corresponding to the different database entities that were exported.

Exported data for specific tables is stored in the format base_prefix/files, where the base prefix is the following:

export_identifier/database_name/schema_name.table_name/

For example:

export-1234567890123-459/rdststdb/rdststdb.DataInsert_7ADB5D19965123A2/

The current convention for file naming is as follows:

partition_index/part-00000-random_uuid.format-based_extension

For example:

1/part-00000-c5a881bb-58ff-4ee6-1111-b41ecff340a3-c000.gz.parquet

2/part-00000-d7a881cc-88cc-5ab7-2222-c41ecab340a4-c000.gz.parquet

3/part-00000-f5a991ab-59aa-7fa6-3333-d41eccd340a7-c000.gz.parquet
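To make the naming convention concrete, here is a small sketch that splits an exported object key into its parts. The function and class names are hypothetical. It assumes the key ends with the five segments described above and that the schema name contains no dot.

```python
from typing import NamedTuple


class ExportedObject(NamedTuple):
    export_identifier: str
    database_name: str
    schema_name: str
    table_name: str
    partition_index: int
    file_name: str


def parse_export_key(key: str) -> ExportedObject:
    """Split an S3 object key from an RDS snapshot export into its components."""
    parts = key.strip("/").split("/")
    if len(parts) < 5:
        raise ValueError(f"unexpected export key layout: {key!r}")
    # Use the last five segments so a leading S3 prefix, if any, is ignored.
    export_id, database, schema_table, partition, file_name = parts[-5:]
    schema, dot, table = schema_table.partition(".")
    if not dot:
        raise ValueError(f"expected schema_name.table_name, got {schema_table!r}")
    return ExportedObject(export_id, database, schema, table, int(partition), file_name)


if __name__ == "__main__":
    key = (
        "export-1234567890123-459/rdststdb/rdststdb.DataInsert_7ADB5D19965123A2/"
        "1/part-00000-c5a881bb-58ff-4ee6-1111-b41ecff340a3-c000.gz.parquet"
    )
    print(parse_export_key(key))
```

Grouping keys by `table_name` this way makes it easier to decide which Parquet files to send to Nightfall.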

You may download these parquet files and upload them to Nightfall to scan as you would any other parquet file.

Obtaining file size

You can obtain the value for fileSizeBytes by running the command wc -c on the file.
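In Python, the same byte count that `wc -c` reports can be read with `os.path.getsize`. The sketch below uses it to assemble the JSON body for starting an upload. The helper name is hypothetical, and the default `mimeType` simply mirrors the value used in the curl example in this guide.

```python
import os


def build_upload_request(path: str, mime_type: str = "application/zip") -> dict:
    """Build the request body for starting a file upload."""
    return {
        # Size of the file in bytes, equivalent to the output of `wc -c`.
        "fileSizeBytes": os.path.getsize(path),
        "mimeType": mime_type,
    }
```

For example, `build_upload_request("userdata1.parquet")` returns a dictionary that can be serialized with `json.dumps` and sent as the request body.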

#Start the upload
curl --location --request POST 'https://api.nightfall.ai/v3/upload' \
--header 'Content-Type: application/json' \
--header 'Authorization: Bearer NF-<Your API Key>' \
--data-raw '{
  "fileSizeBytes": <Your File Size>,
  "mimeType" : "application/zip"
}'

#Resulting payload
{"id":"<Your File Upload ID>","fileSizeBytes":3693,"chunkSize":10485760,"mimeType":"application/zip"}

#Post the file using the 'id' from the returned payload in your path
curl --location --request PATCH 'https://api.nightfall.ai/v3/upload/<Your File Upload ID>' \
--header 'X-Upload-Offset: 0' \
--header 'Content-Type: application/octet-stream' \
--header 'Authorization: Bearer NF-<Your API Key>' \
--data-binary '@///Users/myuser/yourfilepath/userdata1.parquet'

#Finish the upload
curl --location --request POST 'https://api.nightfall.ai/v3/upload/<Your File Upload ID>/finish' \
--header 'Content-Type: application/json' \
--header 'Authorization: Bearer NF-<Your API Key>'

# Scan the file using an alert config
curl --request POST \
--url 'https://api.nightfall.ai/v3/upload/<Your File Upload ID>/scan' \
--header 'Accept: application/json' \
--header 'Authorization: Bearer NF-<Your API Key>' \
--header 'Content-Type: application/json' \
--data '
{
  "policy": {
    "detectionRuleUUIDs": [
      "<Your detection Rule UUID>"
    ],
    "alertConfig": {
      "email": {
        "address": "<Your Email Address>"
      }
    }
  },
  "requestMetadata": "scan of parquet file"
}
'

In the above sequence of curl invocations, we upload the file and then initiate the file scan with a policy that uses a pre-configured detection rule as well as an alertConfig that sends the results to an email address.
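The example file (3,693 bytes) fits in a single PATCH request. For a file larger than the chunkSize returned when the upload is started, the sketch below assumes the file is sent as several PATCH requests, each carrying the starting byte position of its chunk in the X-Upload-Offset header. That behavior is an assumption here, so confirm it against the Nightfall API reference. The helper name is hypothetical.

```python
from typing import Iterator, Tuple


def chunk_ranges(file_size: int, chunk_size: int) -> Iterator[Tuple[int, int]]:
    """Yield (offset, length) pairs that cover a file of file_size bytes."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    offset = 0
    while offset < file_size:
        # The last chunk may be shorter than chunk_size.
        length = min(chunk_size, file_size - offset)
        yield offset, length
        offset += length


if __name__ == "__main__":
    # Values taken from the example payload above: one chunk is enough.
    for offset, length in chunk_ranges(3693, 10485760):
        print(f"X-Upload-Offset: {offset} ({length} bytes)")
```

Each pair tells you where to seek in the file and how many bytes to read for that request.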

Note that the results you receive in this case will be an attachment with a JSON payload as follows:

{

"errors":null,

"findingsPresent":true,

"findingsURL":"https://files.nightfall.ai/18b8b6b8-59c9-4891-9f92-9c357acc19cd.json?Expires=1655306887&Signature=ASDFcCfQpEush3QrWmdZX9A9RePQjNHZTfRkBlgPwdPf~RcNnPYgYzt3G4AAzkI8IDbUdc4CzBbAROTx0oYOtNODCTdoKHKB7Q0a7~hzRNx3BHYHH1msdhkS1qTl3z82RCh6DZi~nk~Oa~yt-XZvAf3ui4MyNU0wyfqjbKO9o79Ec9YWqMdUmTOP1Ss39YmA71e6ky0VOdjdN4baoQV5VElTQ1rHrkdgYHz-95Dnzd3YK3IxGQR92AU7KA3X-rrcmpIJwMUIJSsl8~or0WIg5ar4U9Ood1BFSE~GmlQsKclEo1LEaX2KclWaQtjmN9~3IQnxOmkhPeAhEt-5n~Hbug__&Key-Pair-Id=ASDFOPZ1EKX0YC",

"requestMetadata":"scan of go sdk for URLs sdk",

"uploadID":"18b8b6b8-59c9-4891-9f92-9c357acc19cd",

"validUntil":"2022-06-15T15:28:07.86163221Z"

}

The findings themselves will be available at the URL specified in findingsURL until the date-time stamp contained in the validUntil property.
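Because the link expires, it can be useful to check validUntil before fetching the findings. The sketch below parses the timestamp format shown in the example payload, where the fractional seconds may have more than six digits, and compares it with the current time. The function names are hypothetical.

```python
import re
from datetime import datetime, timezone
from typing import Optional

# Matches timestamps such as 2022-06-15T15:28:07.86163221Z
_TIMESTAMP = re.compile(r"^(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.(\d+))?Z$")


def parse_valid_until(value: str) -> datetime:
    """Parse a UTC timestamp, truncating fractional seconds to microseconds."""
    match = _TIMESTAMP.match(value)
    if not match:
        raise ValueError(f"unrecognized timestamp: {value!r}")
    base, fraction = match.groups()
    # Keep at most six fractional digits and pad shorter fractions with zeros.
    microseconds = int((fraction or "0")[:6].ljust(6, "0"))
    parsed = datetime.strptime(base, "%Y-%m-%dT%H:%M:%S")
    return parsed.replace(microsecond=microseconds, tzinfo=timezone.utc)


def findings_still_available(payload: dict, now: Optional[datetime] = None) -> bool:
    """Return True if the findingsURL in the payload has not yet expired."""
    now = now or datetime.now(timezone.utc)
    return now < parse_valid_until(payload["validUntil"])
```

If `findings_still_available(payload)` returns False, the scan has to be run again to obtain a fresh URL.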

When Parquet files are analyzed, as with other tabular data, the location of each finding is reported not only as a byte range but also by row and column.

Below is a SQL script that creates a small table of generated data containing example personal data, including phone numbers and email addresses.

DROP TABLE IF EXISTS `myTable`;

CREATE TABLE `myTable` (

`id` mediumint(8) unsigned NOT NULL auto_increment,

`name` varchar(255) default NULL,

`phone` varchar(100) default NULL,

`email` varchar(255) default NULL,

`address` varchar(255) default NULL,

`postalZip` varchar(10) default NULL,

`region` varchar(50) default NULL,

`country` varchar(100) default NULL,

`alphanumeric` varchar(255),

`text` TEXT default NULL,

PRIMARY KEY (`id`)

) AUTO_INCREMENT=1;

INSERT INTO `myTable` (`name`,`phone`,`email`,`address`,`postalZip`,`region`,`country`,`alphanumeric`,`text`)

VALUES

("Malcolm Mcgee","1-831-777-4886","[email protected]","109-1617 Augue Av.","873766","Delta","Ukraine","HPJ88FSI6HJ","in faucibus orci luctus et ultrices posuere cubilia Curae Phasellus"),

("Harrison Dudley","(645) 987-7967","[email protected]","P.O. Box 311, 4823 Odio Street","81398-37524","Nordland","Germany","INL95CND6TF","egestas nunc sed libero. Proin sed turpis nec mauris blandit"),

("Driscoll Callahan","1-598-623-3631","[email protected]","689-226 Eu St.","4534 JV","California","Chile","QQI55BTP0CS","velit dui, semper et, lacinia vitae, sodales at, velit. Pellentesque"),

("Anne Rollins","(558) 943-1159","[email protected]","5361 Enim, Street","176814","Minas Gerais","Brazil","EVO25RST5RM","cursus vestibulum. Mauris magna. Duis dignissim tempor arcu. Vestibulum ut"),

("Noah Townsend","(514) 311-3416","[email protected]","797-7375 Consectetuer Ave","5177","Tây Ninh","Chile","CNG87EJF4EK","libero at auctor ullamcorper, nisl arcu iaculis enim, sit amet");

Below is an example finding from a scan of the resulting Parquet file exported to S3, where the Detection Rule uses Nightfall's built-in Detectors for matching phone numbers and emails. This example shows a match in the 1st row and 4th column, which is what we would expect based on our table structure.

{

"findings":[

{

"detector":{

"id":"89f810aa-64a5-4269-b0a0-110d250d55ee",

"name":"email address"

},

"finding":"[email protected]",

"confidence":"LIKELY",

"location":{

"byteRange":{

"start":36,

"end":51

},

"codepointRange":{

"start":36,

"end":51

},

"lineRange":{

"start":1,

"end":1

},

"rowRange":{

"start":1,

"end":1

},

"columnRange":{

"start":4,

"end":4

},

"commitHash":""

},

"matchedDetectionRuleUUIDs":[

"950833c9-8608-4c66-8a3a-0734eac11157"

],

"matchedDetectionRules":[

]

},

Likewise, it finds phone numbers in the 3rd column.

{

"detector":{

"id":"d08edfc4-b5e2-420a-a5fe-3693fb6276c4",

"name":"Phone number",

"version":1

},

"finding":"514) 311-3416",

"confidence":"POSSIBLE",

"location":{

"byteRange":{

"start":814,

"end":827

},

"codepointRange":{

"start":814,

"end":827

},

"lineRange":{

"start":5,

"end":5

},

"rowRange":{

"start":5,

"end":5

},

"columnRange":{

"start":3,

"end":3

},

"commitHash":""

},

"matchedDetectionRuleUUIDs":[

"950833c9-8608-4c66-8a3a-0734eac11157"

],

"matchedDetectionRules":[

]

}
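To relate findings back to the table, the column and row ranges can be mapped onto column names. The sketch below assumes the 1-based numbering seen in the examples above, where column 3 is `phone` and column 4 is `email`. The helper name is hypothetical, and the column list is copied from the CREATE TABLE statement.

```python
from typing import Dict, List, Sequence

# Column order from the CREATE TABLE statement for myTable.
COLUMNS = [
    "id", "name", "phone", "email", "address",
    "postalZip", "region", "country", "alphanumeric", "text",
]


def locate_findings(findings: List[dict], columns: Sequence[str] = COLUMNS) -> List[Dict[str, object]]:
    """Summarize each finding as detector name, row number, and column name."""
    located = []
    for finding in findings:
        location = finding["location"]
        column_number = location["columnRange"]["start"]  # assumed 1-based
        located.append(
            {
                "detector": finding["detector"]["name"],
                "row": location["rowRange"]["start"],
                "column": columns[column_number - 1],
            }
        )
    return located


if __name__ == "__main__":
    # Trimmed-down versions of the two example findings shown above.
    sample = [
        {
            "detector": {"name": "email address"},
            "location": {"rowRange": {"start": 1, "end": 1}, "columnRange": {"start": 4, "end": 4}},
        },
        {
            "detector": {"name": "Phone number"},
            "location": {"rowRange": {"start": 5, "end": 5}, "columnRange": {"start": 3, "end": 3}},
        },
    ]
    for entry in locate_findings(sample):
        print(entry)
```

The input is the `findings` list from the JSON document retrieved via findingsURL.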

You may also use our tutorial for Integrating with Amazon S3 (Python) to scan through the S3 objects.

For more information, please see the Amazon documentation Exporting DB snapshot data to Amazon S3.
